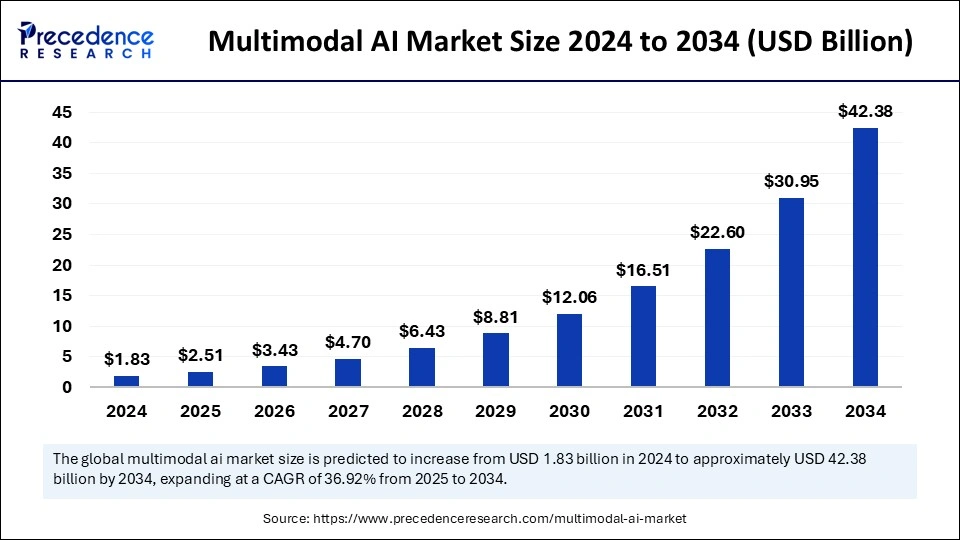

Multimodal AI Market Size to Reach USD 42.38 Billion by 2034

Multimodal AI Market Size and Growth

The global multimodal AI market size was estimated at USD 1.83 billion in 2024 and is expected to reach around USD 42.38 billion by 2034, growing at a CAGR of 36.92% from 2025 to 2034.
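The headline figures can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. Below is a minimal Python sketch. It assumes 2024 is the base year and that the 2025 to 2034 forecast period covers ten annual growth steps. The helper names `cagr` and `project` are illustrative only and do not come from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted above (USD billion). Assumption: 2024 is the base year,
# so 2025 through 2034 gives ten annual growth steps.
SIZE_2024 = 1.83
SIZE_2034 = 42.38
YEARS = 2034 - 2024

rate = cagr(SIZE_2024, SIZE_2034, YEARS)
print(f"Implied CAGR: {rate:.2%}")
print(f"2025 size at that rate: USD {project(SIZE_2024, rate, 1):.2f} billion")
```

Running it prints an implied CAGR of about 36.92%, in line with the stated rate. One year of growth from the 2024 base gives roughly USD 2.51 billion for 2025, which matches the 2025 market size reported in the scope table.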

Get a sample copy of the report: https://www.precedenceresearch.com/sample/5728

Key Points

- North America held the largest market share, at 48%, in 2024.

- Asia Pacific is projected to grow at the fastest rate during the forecast period.

- The software segment led the market with a 66% share in 2024.

- The services segment is expected to expand at a CAGR of 38% over the forecast period.

- Text data was the leading data modality segment in 2024.

- The speech & voice data segment is poised to register the fastest growth.

- The media & entertainment industry held the largest end-use share in 2024.

- The BFSI segment is anticipated to grow robustly during the forecast period.

- Large enterprises dominated the market in 2024.

- SMEs are expected to expand notably over the forecast period.

AI Transforming the Multimodal AI Landscape

- Next-Level Human-Machine Interaction: AI allows machines to process and respond to multiple sensory inputs simultaneously, improving communication in robotics and virtual assistants.

- Accelerated Growth of Smart Devices: AI enhances multimodal capabilities in smart home systems, wearables, and IoT devices, making them more adaptive and intelligent.

- Advanced Autonomous Systems: AI-powered multimodal perception enables self-driving cars, drones, and robots to interpret complex real-world environments more effectively.

- Real-Time Multimodal Translation: AI-driven language models integrate speech, text, and images to deliver accurate real-time translation, enhancing cross-language communication.

- Empowering Augmented and Virtual Reality: AI enhances multimodal AR/VR experiences by integrating visual, auditory, and gesture-based interactions for more immersive digital environments.


Market Scope

| Report Coverage | Details |
|---|---|
| Market Size by 2034 | USD 42.38 Billion |
| Market Size in 2025 | USD 2.51 Billion |
| Market Size in 2024 | USD 1.83 Billion |
| Market Growth Rate from 2025 to 2034 | CAGR of 36.92% |
| Dominant Region | North America |
| Fastest Growing Market | Asia Pacific |
| Base Year | 2024 |
| Forecast Period | 2025 to 2034 |
| Segments Covered | Component, Data Modality, End-Use, Enterprise Size, and Region |
| Regions Covered | North America, Europe, Asia Pacific, Latin America, and Middle East & Africa |

Market Dynamics

Drivers

The increasing demand for AI-driven human-machine interactions is a major driver for the multimodal AI market. Businesses and consumers are seeking more natural and intuitive communication with digital systems, leading to the adoption of AI models that integrate multiple data types, such as text, speech, images, and gestures.

Additionally, the rapid advancements in deep learning and neural networks have enabled more sophisticated multimodal AI applications across industries, from healthcare and finance to e-commerce and entertainment. The rise of smart devices, autonomous systems, and interactive AI-driven content is further fueling market growth.

Opportunities

The growing adoption of multimodal AI in healthcare presents a significant opportunity. AI-powered systems that analyze text-based patient records, medical images, and voice inputs can improve diagnostics and treatment planning.

Similarly, the education sector is witnessing a transformation as multimodal AI enhances personalized learning experiences through a combination of text, video, and voice-based content. Another key opportunity lies in the expansion of smart cities and IoT ecosystems, where multimodal AI is used for traffic monitoring, security surveillance, and automated assistance systems.

Challenges

Despite its potential, the multimodal AI market faces several challenges. The integration of multiple data types requires significant computational power and complex algorithms, making implementation costly. Data privacy and security concerns also pose risks, as multimodal AI systems often rely on vast amounts of sensitive user data.

Additionally, the lack of standardization in multimodal AI models across industries creates interoperability issues, limiting widespread adoption. Addressing biases in AI models and ensuring ethical AI deployment are further challenges that companies must navigate.

Regional Analysis

North America currently leads the multimodal AI market due to its strong AI research ecosystem, technological advancements, and high adoption rates across industries. The presence of major AI companies and sustained investment in AI-driven innovation contribute to the region's dominance. Asia Pacific is expected to witness the fastest growth, driven by increasing AI adoption in China, India, and Japan.

The region’s expanding digital economy, government initiatives, and rapid advancements in AI research create a favorable market landscape. Europe remains a key player, with a strong focus on AI regulations and ethical AI deployment, particularly in sectors like finance, healthcare, and automotive.

Recent Developments

- In December 2024, Google released Gemini 2.0 Flash as its new flagship AI model, updated other AI features, and introduced Gemini 2.0 Flash Thinking Experimental. The new models are available through the Gemini app, extending its AI reasoning capabilities.

- In December 2023, Alphabet Inc. unveiled its Gemini AI model. Google reported that Gemini Ultra, the largest version, was the first model to outperform human experts on the widely used Massive Multitask Language Understanding (MMLU) benchmark.

- In October 2023, Reka launched Yasa-1, its first multimodal AI assistant, which supports text, image, short video, and audio inputs. Enterprises can customize Yasa-1 on their private datasets across these modalities, enabling new experiences for a range of use cases.

- In September 2023, Meta announced its Ray-Ban smart glasses with multimodal AI capabilities that gather information about the surroundings through built-in cameras and microphones. Users activate the AI assistant with the voice command “Hey Meta,” allowing it to see and hear what is happening around the wearer.

Multimodal AI Market Companies

- Amazon Web Services, Inc.

- Aimesoft

- Google LLC

- Jina AI GmbH

- IBM Corporation

- Meta

- Microsoft

- OpenAI, L.L.C.

- Twelve Labs Inc.

- Uniphore Technologies Inc.

Segments Covered in the Report

By Component

- Software

- Services

By Data Modality

- Image Data

- Text Data

- Speech & Voice Data

- Video & Audio Data

By End-use

- Media & Entertainment

- BFSI

- IT & Telecommunication

- Healthcare

- Automotive & Transportation

- Gaming

- Others

By Enterprise Size

- Large Enterprises

- SMEs

By Region

- North America

- Europe

- Asia Pacific

- Latin America

- Middle East and Africa (MEA)
